PDF(760 KB)

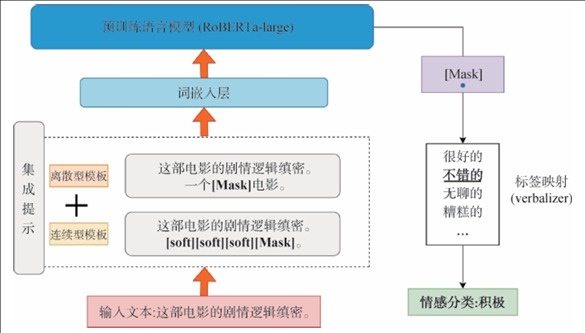

Text Sentiment Classification Model Enhanced by Prompt Learning

Text Sentiment Classification Algorithm Based on Prompt Learning Enhancement
